Language Modeling (LM)

* What is Language Modeling :

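Language modeling underpins modern NLP. A language model assigns probabilities to sequences of words, typically by predicting the next word from its context, using statistics learned from large amounts of text. It is essential for tasks like word prediction and speech recognition, and it drives much of the progress in getting machines to understand human language.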

Types of language models :

N-gram Models : These models predict the next word based on the preceding n-1 words, where n is the order of the model. For example, a trigram model (n=3) predicts the next word using the two preceding words.

N-gram Language Model Example : Consider a simple corpus of text:

I love hiking in the mountains.

The mountains are beautiful.

Trigram Model:

P("in" | "love", "hiking") = Count("love hiking in") / Count("love hiking")

P("are" | "the", "mountains") = Count("the mountains are") / Count("the mountains")
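To make the counting concrete, here is a minimal sketch that estimates trigram probabilities from the two-sentence corpus above. It assumes lowercased, whitespace-split tokens with the final period dropped; the helper names `ngrams` and `trigram_prob` are made up for this example.

```python
from collections import Counter

# The toy corpus from the example, with the trailing periods dropped.
corpus = [
    "I love hiking in the mountains",
    "The mountains are beautiful",
]

def ngrams(tokens, n):
    """Return every run of n consecutive tokens as a tuple."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

trigram_counts = Counter()
bigram_counts = Counter()
for sentence in corpus:
    tokens = sentence.lower().split()  # naive whitespace tokenization
    trigram_counts.update(ngrams(tokens, 3))
    bigram_counts.update(ngrams(tokens, 2))

def trigram_prob(w1, w2, w3):
    """Maximum-likelihood estimate of P(w3 | w1, w2); 0.0 for an unseen context."""
    context = bigram_counts[(w1, w2)]
    return trigram_counts[(w1, w2, w3)] / context if context else 0.0

print(trigram_prob("love", "hiking", "in"))      # 1.0
print(trigram_prob("the", "mountains", "are"))   # 0.5
```

The second value is 0.5 because "the mountains" occurs twice in the corpus but is followed by "are" only once. Real n-gram models also add smoothing so that unseen word sequences do not get a probability of exactly zero.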

Neural Language Models: Neural network-based language models, such as recurrent neural networks (RNNs) and transformer models like GPT (Generative Pre-trained Transformer), use deep learning techniques to capture complex language patterns. Because they are not limited to a fixed window of n-1 words, these models can capture longer-range dependencies and generate more fluent and coherent text than n-gram models.

* What are the applications if we can model language :

Modeling language opens up a wide array of applications across different fields. Here are some key applications.

Machine Translation : Language models enable accurate translation between languages, facilitating global communication and collaboration. For example, Google Translate uses advanced language models to provide real-time translations.

Sentiment Analysis : Language models can analyze text to determine sentiment, helping businesses gauge customer opinions, reviews, and social media sentiment towards their products or services.

Chatbots and Virtual Assistants : Language models power chatbots and virtual assistants by understanding and generating human-like responses, enhancing customer support, and automating tasks like scheduling appointments or answering FAQs.

Text Summarization : Language models can summarize large volumes of text into concise summaries, aiding in information retrieval, document analysis, and content curation.

Information Extraction : Language models extract structured information from unstructured text, enabling tasks such as named entity recognition, keyphrase extraction, and event extraction.

Search Engines : Language models improve search engine capabilities by understanding user queries, enhancing search relevance, and supporting features like autocomplete and natural language search.

Content Generation : Language models generate diverse content, including articles, stories, poetry, code snippets, and more, benefiting content creators, marketers, and educators.

Speech Recognition : Language models assist speech recognition systems by scoring candidate transcriptions so that the most plausible word sequence is chosen, enabling applications like virtual assistants, dictation software, and voice-controlled devices.

Language Understanding : Language models aid in understanding and generating natural language, supporting tasks such as question answering, dialogue systems, and language generation.

Personalization : Language models power personalized recommendations, advertisements, and user experiences by analyzing user behavior, preferences, and context.

* How do you model a language :

Statistical Language Models :

N-gram Models : These models estimate the probability of a word based on the previous n-1 words. For example, a trigram model predicts the next word using the two preceding words.

Hidden Markov Models (HMMs) : HMMs model a sentence as a sequence of hidden states (for example, part-of-speech tags), with probabilities assigned to transitions between states and to the emission of each observed word from a state.
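As a rough illustration, the sketch below scores a word sequence under a tiny HMM using the forward algorithm, which sums over every possible hidden-state path. The two states, the three-word vocabulary, and all of the probabilities are hypothetical values picked for the example, not numbers from any real model.

```python
# Hypothetical toy parameters, chosen only for illustration.
STATES = ["NOUN", "VERB"]
START = {"NOUN": 0.6, "VERB": 0.4}
TRANS = {
    "NOUN": {"NOUN": 0.3, "VERB": 0.7},
    "VERB": {"NOUN": 0.8, "VERB": 0.2},
}
EMIT = {
    "NOUN": {"dogs": 0.5, "cats": 0.4, "bark": 0.1},
    "VERB": {"dogs": 0.1, "cats": 0.1, "bark": 0.8},
}

def sequence_probability(words):
    """Forward algorithm: P(words), summed over all hidden-state paths."""
    if not words:
        return 1.0
    # alpha[s] = probability of the words so far, ending in state s
    alpha = {s: START[s] * EMIT[s].get(words[0], 0.0) for s in STATES}
    for word in words[1:]:
        alpha = {
            s: EMIT[s].get(word, 0.0) * sum(alpha[p] * TRANS[p][s] for p in STATES)
            for s in STATES
        }
    return sum(alpha.values())

print(sequence_probability(["dogs", "bark"]))
print(sequence_probability(["bark", "dogs"]))
```

With these made-up numbers, "dogs bark" comes out more probable than "bark dogs", because a noun followed by a verb is the favored transition.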

Neural Language Models :

Recurrent Neural Networks (RNNs) : RNNs process sequences of words and maintain a hidden state that captures contextual information. They are trained to predict the next word in a sequence based on the preceding words.
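The hidden-state update can be sketched in a few lines of plain Python. This is only the recurrence h_t = tanh(W_xh x_t + W_hh h_(t-1) + b_h) of a simple (Elman-style) RNN, with no output layer and no training; the weight values and the one-hot word vectors are arbitrary assumptions for the example.

```python
import math

def rnn_step(x, h_prev, W_xh, W_hh, b_h):
    """One recurrence step: combine the current input with the previous hidden state."""
    h = []
    for i in range(len(h_prev)):
        total = b_h[i]
        total += sum(W_xh[i][j] * x[j] for j in range(len(x)))
        total += sum(W_hh[i][j] * h_prev[j] for j in range(len(h_prev)))
        h.append(math.tanh(total))
    return h

# Arbitrary small weights: 3-dimensional inputs, 2-dimensional hidden state.
W_xh = [[0.5, -0.3, 0.1], [0.2, 0.4, -0.6]]
W_hh = [[0.1, 0.7], [-0.4, 0.2]]
b_h = [0.0, 0.0]

def encode(sequence):
    """Run the RNN over a sequence of input vectors and return the final hidden state."""
    h = [0.0, 0.0]
    for x in sequence:
        h = rnn_step(x, h, W_xh, W_hh, b_h)
    return h

word_a = [1.0, 0.0, 0.0]  # one-hot vectors standing in for two different words
word_b = [0.0, 1.0, 0.0]
print(encode([word_a, word_b]))
print(encode([word_b, word_a]))
```

The two printed states differ even though both sequences contain the same words, which is the sense in which the hidden state carries information about word order and context.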

Long Short-Term Memory (LSTM) Networks : LSTMs are a type of RNN designed to better capture long-range dependencies and mitigate the vanishing gradient problem.

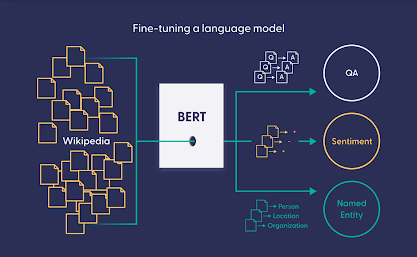

Transformer Models : Transformer models, such as BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer), use attention mechanisms to learn contextual representations of words. Encoder models like BERT are mainly used for language understanding tasks, while decoder models like GPT generate text.
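The core of the attention mechanism is scaled dot-product attention: each query is compared against every key, the scores are turned into weights with a softmax, and the output is the weighted average of the values. Below is a minimal single-head sketch in plain Python, leaving out the learned projections, masking, and multiple heads that real transformers use; the input vectors are made up for the example.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Each output dimension is a weighted average over the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

queries = [[1.0, 0.0]]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[1.0, 2.0], [3.0, 4.0]]
outputs, weights = attention(queries, keys, values)
print(weights[0])  # the first key matches the query better, so it gets more weight
print(outputs[0])
```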

Training Process :

Data Collection : Language models are trained on large corpora of text data, which can include books, articles, websites, and other sources.

Tokenization : Text data is split into tokens, which may be words, subword units, or individual characters, and each token is mapped to an integer ID for processing by the model.
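A minimal sketch of word-level tokenization and ID mapping, assuming a simple regular-expression split and an `<unk>` entry for unknown words. The function names are invented for this example; production systems usually rely on trained subword tokenizers instead.

```python
import re

def word_tokenize(text):
    """Lowercase the text and split it into words and punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text.lower())

def build_vocab(tokens):
    """Assign each distinct token an integer ID; ID 0 is reserved for unknown tokens."""
    vocab = {"<unk>": 0}
    for token in tokens:
        vocab.setdefault(token, len(vocab))
    return vocab

def encode(tokens, vocab):
    """Map tokens to IDs, falling back to <unk> for tokens not in the vocabulary."""
    return [vocab.get(token, vocab["<unk>"]) for token in tokens]

train_tokens = word_tokenize("I love hiking in the mountains.")
vocab = build_vocab(train_tokens)

print(train_tokens)
# ['i', 'love', 'hiking', 'in', 'the', 'mountains', '.']
print(encode(word_tokenize("The mountains are beautiful."), vocab))
# [5, 6, 0, 0, 7]
```

"are" and "beautiful" were never seen when the vocabulary was built, so both map to ID 0. Subword tokenization exists largely to reduce how often this happens.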

Embeddings : Words are often represented as dense vectors (word embeddings) that capture semantic and syntactic relationships. Pre-trained embeddings like Word2Vec or GloVe can be used, while transformer models typically learn their own embeddings during training.
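One way to see what "capturing relationships" means is to compare vectors with cosine similarity. The three-dimensional vectors below are hand-written, hypothetical values for illustration only; real embeddings have hundreds of dimensions and are learned from data.

```python
import math

# Hypothetical toy embeddings, not taken from any trained model.
embeddings = {
    "mountain": [0.9, 0.1, 0.3],
    "hill": [0.8, 0.2, 0.4],
    "keyboard": [0.1, 0.9, 0.2],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

print(cosine_similarity(embeddings["mountain"], embeddings["hill"]))
print(cosine_similarity(embeddings["mountain"], embeddings["keyboard"]))
```

With these toy values, "mountain" is much closer to "hill" than to "keyboard", which is the kind of pattern trained embeddings are meant to show for related words.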

Model Training : The language model is trained using the data to learn the statistical patterns and relationships between words. This involves optimizing parameters to minimize the model's prediction error.
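"Minimizing prediction error" usually means minimizing cross-entropy: the average negative log-probability the model gives to the word that actually came next. The sketch below is a deliberately tiny case, assuming a single fixed context and a three-word vocabulary, where the only parameters are three logits updated by gradient descent. The vocabulary, the observed next words, and the learning rate are all assumptions made for the example.

```python
import math

def softmax(logits):
    """Convert logits into a probability distribution."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probs, targets):
    """Average negative log-probability assigned to the observed next words."""
    return -sum(math.log(probs[t]) for t in targets) / len(targets)

def train(targets, vocab_size, lr=0.5, epochs=200):
    """Fit one logit per word by gradient descent on the cross-entropy loss."""
    logits = [0.0] * vocab_size
    losses = []
    for _ in range(epochs):
        probs = softmax(logits)
        losses.append(cross_entropy(probs, targets))
        # Gradient for logit i: predicted probability minus observed frequency.
        grad = [probs[i] - targets.count(i) / len(targets) for i in range(vocab_size)]
        logits = [l - lr * g for l, g in zip(logits, grad)]
    return softmax(logits), losses

VOCAB = ["mountains", "hills", "trails"]
targets = [0, 0, 1, 2]  # word IDs observed after one hypothetical context, e.g. "the"
probs, losses = train(targets, len(VOCAB))
print(dict(zip(VOCAB, probs)))
print(losses[0], losses[-1])
```

The learned probabilities approach the observed frequencies (one half, one quarter, one quarter), and the loss falls as training proceeds. A real language model does the same thing with millions of parameters shared across all contexts.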

Evaluation : Language models, whether statistical or neural, are commonly evaluated with perplexity, which measures how well the model predicts held-out text (lower is better). Generated text is additionally judged on task accuracy and fluency.
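Perplexity is the exponential of the average negative log-probability that the model assigned to each token that actually occurred. Here is a small sketch, assuming you already have those per-token probabilities in a list; the input values are invented for the example.

```python
import math

def perplexity(token_probs):
    """exp of the mean negative log-probability of the observed tokens."""
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_nll)

# A model that gives every observed token probability 0.25 is, on average,
# as uncertain as a uniform choice among 4 words.
print(perplexity([0.25, 0.25, 0.25, 0.25]))

# More confident (and correct) predictions give lower perplexity.
print(perplexity([0.9, 0.8, 0.95, 0.7]))
```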

Inference and Generation :

* Once trained, language models can be used for tasks like text generation, sentiment analysis, machine translation, and more.

* During inference, the model predicts the next word or sequence of words based on the context provided, using deterministic decoding (such as greedy or beam search) or probabilistic sampling, depending on the decoding strategy.
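The difference between always picking the most likely word and sampling from the distribution can be sketched as follows. The next-word distribution is a made-up example, and the `temperature` parameter is a common but optional extra that sharpens or flattens the distribution before sampling.

```python
import math
import random

# Hypothetical model output for the context "I love hiking in the".
next_word_probs = {"mountains": 0.6, "woods": 0.3, "rain": 0.1}

def greedy(probs):
    """Deterministic decoding: always return the most probable word."""
    return max(probs, key=probs.get)

def sample(probs, temperature=1.0, rng=random):
    """Probabilistic decoding: draw a word in proportion to p ** (1 / temperature).

    Assumes every probability is greater than zero.
    """
    words = list(probs)
    weights = [math.exp(math.log(probs[w]) / temperature) for w in words]
    return rng.choices(words, weights=weights, k=1)[0]

rng = random.Random(0)  # seeded so that repeated runs give the same draws
print(greedy(next_word_probs))
print([sample(next_word_probs, temperature=1.0, rng=rng) for _ in range(5)])
print([sample(next_word_probs, temperature=0.01, rng=rng) for _ in range(5)])
```

Greedy decoding gives the same answer every time, while sampling produces varied output. As the temperature approaches zero, sampling behaves more and more like greedy decoding.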
